Businesses constantly strive to empower developers and data scientists to create and deploy new applications and services quickly. To do this, they need to rapidly adopt a cloud-native architecture and self-service infrastructure. The environment of choice for developing and deploying such applications is often an open-source one, built on Linux and tools such as Docker and its alternatives.

According to Gartner, more than 75% of companies use application containerization in 2023, and by 2024 the share of applications running in a containerized environment is expected to grow to 15%. One of the most popular and convenient container platforms is Docker: 77% of developers use it. In this article, we explore the future of container development in web applications and its relevance in 2023.

How do containers work?

The main driver behind containerization technology was the emergence of complex, highly loaded IT infrastructures. For such infrastructures to work fully, each element must be reliable, and the elements must affect each other as little as possible. This requires an operating system and software that can "wrap" these elements in containers and break large, monolithic IT infrastructures into many small, independent elements that interact with each other.

The original Linux container technology is called Linux Containers, or LXC. LXC is an OS-level virtualization method for running multiple isolated Linux systems on a single host. Containers separate applications from the operating system: the user gets a clean, minimal Linux OS and can run all processes in one or more isolated containers. Because the operating system is separate from the containers, you can move a container to any Linux server that supports the container runtime environment.

Containers went mainstream in 2013, when Docker was released and made it far easier to build and run containers on Linux. In the summer of 2014, Kubernetes, a tool for automating container management, was launched.

Containers are a new level of IT infrastructure virtualization. They isolate individual processes within a single OS while giving them shared access to libraries and resources. Thanks to containers, each separately launched application retains all the advantages of the cloud: redundancy, uninterrupted operation, scalability, and automatic management.

Containers are ideal for dynamic and highly loaded services, such as government services, large online stores, and marketplaces. With containers, a complex IT infrastructure works like a single living organism. It consists of "cells", the containers, which "grow" and, when necessary, "die." The health of such a system is maintained by an internal "immune system": monitoring and scheduling services that determine how many resources and running instances are needed to process all requests. In a container environment, there is no need to configure each server and virtual machine separately.

A containerized application runs in an isolated environment: it has its own view of processes, the file system, and the network, and its use of the host's memory, CPU, and disk can be limited. This guarantees the isolation of processes inside the container.

Why are developers using containers?

The very nature of containers and Docker technology makes it easy for developers to share their software and its dependencies across development, production, and operations environments. As a result, problems where code works on one computer but not on another can be detected early. Containers are used to resolve application conflicts between different environments. Indirectly, containers and Docker technology bridge the gap between developers and IT operations, allowing them to work together effectively. By adopting a containerized workflow, many customers gain the DevOps continuity that previously required more complex release and build pipeline configurations. Using containers simplifies DevOps build, test, and deployment pipelines.

For an application to work effectively in containers, it is not enough to simply create a container image and run it. Ensure that the architecture of the application and container follow the basic principles of containerization:

One container - one service

A container should do only one thing; it shouldn't contain every entity the application depends on. Following this principle makes images more reusable and makes the application easier to scale. Often only some parts of the technology stack are under load, and splitting the parts into separate containers lets you scale them independently and improve your service's performance.

Immutability of the image

All changes inside the container must be made at the image build stage. This ensures that no data is lost when the container is destroyed. Container immutability also makes it possible to run parallel tasks in CI/CD systems: for example, you can run various kinds of tests simultaneously, speeding up the product development process.

Container recyclability

Any container can be destroyed at any time and replaced with another one without stopping the service. The configuration of a container, in the form of its image, is kept separate from the container instance that actually performs the work. Following this principle means treating container rotation as a requirement from the start of development.

Reporting

The container must expose endpoints for checking its readiness (readiness probe) and liveness (liveness probe), and it must provide logs for tracking the state of the application.
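A minimal sketch of such probe endpoints, using only Python's standard library. The paths `/healthz` and `/readyz`, the default port, and the `READY` flag are illustrative assumptions, not names required by Docker or Kubernetes; an orchestrator is simply pointed at whatever paths the application exposes.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical flag the application sets once start-up work (e.g. a DB connection) is done.
READY = threading.Event()


def probe_response(path: str, ready: bool) -> tuple[int, str]:
    """Map a probe path to an HTTP status code and a short body."""
    if path == "/healthz":  # liveness: the process is up and answering
        return 200, "ok"
    if path == "/readyz":  # readiness: the process can also accept traffic
        return (200, "ready") if ready else (503, "not ready")
    return 404, "not found"


class ProbeHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        status, body = probe_response(self.path, READY.is_set())
        payload = body.encode()
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format: str, *args) -> None:
        pass  # Keep frequent probe requests out of the application log.


def serve_probes(port: int = 8080) -> HTTPServer:
    """Start the probe server on a background thread and return it."""
    server = HTTPServer(("0.0.0.0", port), ProbeHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

In Kubernetes, an `httpGet` liveness probe would target `/healthz` and an `httpGet` readiness probe `/readyz`: a failing readiness probe takes the container out of load balancing, while a failing liveness probe gets it restarted.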

Controllability

The application in the container must be able to interact with the process that controls it: for example, it must shut down correctly when it receives a stop command from outside. This prevents the loss of user data when the container is stopped or destroyed.
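A minimal sketch of such a controllable process in Python. It assumes the stop command arrives as SIGTERM, which is what `docker stop` sends first (followed by SIGKILL after a grace period, 10 seconds by default; Kubernetes does the same with a 30-second default). The job list and the `run_worker` function are hypothetical stand-ins for real work.

```python
import signal
import threading

# Set when the controlling process asks the application to stop.
stop_requested = threading.Event()


def handle_sigterm(signum, frame) -> None:
    """Signal handler: only record the request, never exit mid-task."""
    stop_requested.set()


def run_worker(jobs: list[str], processed: list[str]) -> None:
    """Process jobs one by one, checking for a stop request between jobs."""
    for job in jobs:
        if stop_requested.is_set():
            break  # Unfinished jobs are left for a replacement container.
        processed.append(job)  # Stand-in for real work that must not be cut off midway.
    # A real service would flush buffers and close connections here.


if __name__ == "__main__":
    signal.signal(signal.SIGTERM, handle_sigterm)
    done: list[str] = []
    run_worker([f"job-{i}" for i in range(1000)], done)
    print(f"processed {len(done)} jobs, exiting cleanly")
```

The handler deliberately does nothing but set a flag: the work loop decides when it is safe to stop, so a unit of work is never interrupted halfway.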

Self-sufficiency

The image with the application must include all the dependencies it needs to work: libraries, configs, etc. Other services, however, are not among these dependencies; bundling them into the image would contradict the “one container - one service” principle.

Resource limit

Best practices for operating containers include setting resource limits (CPU and RAM). Following this practice keeps resource usage under control and lets you react in time when a container consumes too much.
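One way to make a service aware of its limits is to read them from inside the container. The sketch below assumes a host using cgroup v2, where the limits appear in `/sys/fs/cgroup/cpu.max` (a quota and a period in microseconds, or `max`) and `/sys/fs/cgroup/memory.max` (bytes, or `max`); on cgroup v1 hosts the files and formats differ. The function names are hypothetical.

```python
from pathlib import Path
from typing import Optional

CGROUP_ROOT = Path("/sys/fs/cgroup")  # cgroup v2 unified hierarchy (assumption)


def parse_cpu_max(text: str) -> Optional[float]:
    """Turn cpu.max ("<quota> <period>" in microseconds) into a number of CPUs."""
    quota, period = text.split()
    if quota == "max":
        return None  # No CPU limit configured.
    return int(quota) / int(period)


def parse_memory_max(text: str) -> Optional[int]:
    """Turn memory.max into a byte count, or None when unlimited."""
    value = text.strip()
    return None if value == "max" else int(value)


def read_limits(root: Path = CGROUP_ROOT) -> dict:
    """Read this container's CPU and memory limits; None means not limited or unknown."""
    limits = {"cpus": None, "memory_bytes": None}
    cpu_file, mem_file = root / "cpu.max", root / "memory.max"
    if cpu_file.exists():
        limits["cpus"] = parse_cpu_max(cpu_file.read_text())
    if mem_file.exists():
        limits["memory_bytes"] = parse_memory_max(mem_file.read_text())
    return limits


if __name__ == "__main__":
    print(read_limits())
```

For a container started with `docker run --cpus=0.5 --memory=512m ...`, these files would typically contain `50000 100000` and `536870912`, so `read_limits()` would report 0.5 CPUs and 512 MiB. A service can use these values, for example, to size its worker pool.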

Differences between containers, sandboxes, and VMs

Three terms come up most often when talking about general computer security and mobile security: containers, virtualization, and sandboxes.

A container is a running instance that encapsulates the necessary software. Containers are always created from an image and can expose ports and disk space to interact with other containers and external software. Containers can easily be destroyed and recreated, and they are not meant to store state.

In computer security, the term sandbox can refer to two technical mechanisms. In a desktop or server OS, a sandbox is an environment that runs applications with restricted access to the rest of the system. On mobile platforms, iOS and Android each implement slightly different forms of sandboxing: every app has a list of the resources it can access, which the user must approve. Sandboxes attract hackers because malware that escapes the sandbox is no longer bound by its restrictions.

The most common question when choosing an environment for launching an application is how containers differ from virtual machines. The difference is fundamental. A container is a restricted space inside the OS that uses the host system's kernel to access hardware resources. A VM, by contrast, is a complete machine with all the devices necessary for its operation.

Containerization is a related, lighter-weight alternative to the virtual machine. Instead of emulating the hardware layer, containerization removes the guest operating system from the isolated environment, and the container shares the host's kernel. This allows the application to run isolated from other software on the host. Containerization prevents wasted resources because applications get precisely the resources they need. Here are some differences to keep in mind:

- Containers require significantly fewer resources to run, which has a positive impact on performance and budget.

- Containers can only run on the same operating system as the host: you can't run a Windows container on a Linux host (on personal devices, this limitation is bypassed using virtualization). However, this does not apply to different distributions of the same OS, such as Ubuntu and Alpine Linux.

- Containers provide less isolation because they use the kernel of the host system, which can create more operational risks if you neglect security.

What is Docker and its advantages?

The main principle of working with Docker can be summed up in a three-word slogan: Build, Ship, Run. Each word stands for a particular stage and element of the system:

- Build. This stage means creating a Dockerfile: the instructions according to which the whole "ship" is built and sent to "sail." In the file, you describe the image of the future product and the principles of its creation and operation.

- Ship. The next step is the Docker image, a ready-made image for building and running a container. If a Dockerfile is a floor plan, a Docker image is the design project: a picture of the finished renovation plus the electrical and plumbing wiring diagrams, etc.

- Run. At the last stage, the Docker container appears: a running instance of the image. If the image is the design project, the container is the finished space, ready for occupancy.
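The three stages map onto three Docker CLI commands. The sketch below only assembles and prints them (pass `dry_run=False` to execute them with a local Docker installation); the image name `registry.example.com/team/web-app:1.0` is a hypothetical placeholder, and pushing it would require being logged in to that registry.

```python
import shlex
import subprocess


def build_ship_run(image: str, context: str = ".") -> list[list[str]]:
    """Return the docker CLI invocations for the Build, Ship, and Run stages."""
    return [
        ["docker", "build", "-t", image, context],  # Build: Dockerfile in `context` -> image
        ["docker", "push", image],  # Ship: upload the image to a registry
        ["docker", "run", "--rm", image],  # Run: start a container from the image
    ]


def execute(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print each command and, unless dry_run is set, run it and stop on failure."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    execute(build_ship_run("registry.example.com/team/web-app:1.0"))
```

Keeping the commands as argument lists rather than shell strings avoids quoting problems when image names or paths contain unusual characters.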

Here are three main features that DevOps professionals enjoy when using Docker:

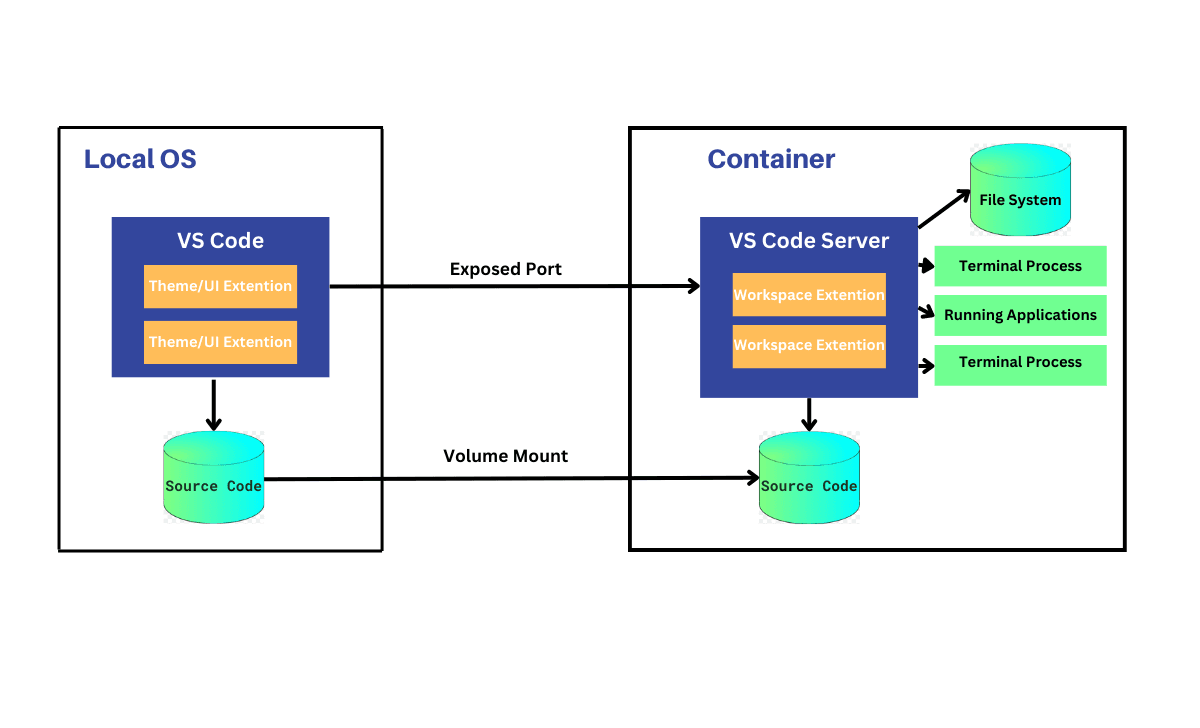

Build container images: You can package, build, and run container images with Docker Compose and Docker Build, and integrate development tools like VS Code, CircleCI, and GitHub into your development process.

Image Sharing: You can easily share container images across your organization or team through Docker Hub.

Running Containers: You can deploy and run multiple applications across your environments. Containers for applications written in different languages can be deployed independently of one another, which reduces the risk of errors caused by conflicting environments. This is handled by Docker Engine.

The main advantages of Docker include the following:

- Speeding up the development process

Developers don't need to install third-party applications such as PostgreSQL, Redis, and Elasticsearch on their systems; they can run them in containers. Docker also allows you to run different versions of the same application simultaneously. This helps, for example, when upgrading Postgres, since you must migrate data from the old version to the new one manually. The same happens in a microservice architecture when you want to create a microservice with a new version of third-party software. Installing two different versions of the same application on the host OS is challenging. In such conditions, Docker containers are perfect: you get an isolated environment for the application and for third-party services.
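For example, two PostgreSQL versions can run side by side from the official `postgres` images, each in its own container and published on its own host port. The sketch below only builds the `docker run` commands; the versions, container names, ports, and password are illustrative assumptions (the official image expects a `POSTGRES_PASSWORD` variable when it initializes a new database).

```python
import shlex


def postgres_command(version: str, host_port: int, password: str) -> list[str]:
    """Build a `docker run` command for one PostgreSQL version."""
    return [
        "docker", "run", "-d",
        "--name", f"pg{version}",  # e.g. pg15, pg16
        "-e", f"POSTGRES_PASSWORD={password}",  # used by the official image on first start
        "-p", f"{host_port}:5432",  # host port -> Postgres port inside the container
        f"postgres:{version}",
    ]


def side_by_side(versions: list[str], password: str, first_port: int = 5432) -> list[list[str]]:
    """Give each version its own container and its own host port."""
    return [
        postgres_command(version, first_port + offset, password)
        for offset, version in enumerate(versions)
    ]


if __name__ == "__main__":
    for cmd in side_by_side(["15", "16"], password="example-only"):
        print(shlex.join(cmd))
```

During an upgrade, data can then be dumped from the old container (for example, with `pg_dump`) and restored into the new one while both are running, without either version being installed on the host.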

- Convenient application encapsulation

Most programming languages, frameworks, and all operating systems have package managers. If your application uses a native package manager, creating a port for another system may be difficult. Docker provides a single image format for distributing applications between operating systems and cloud services. Your application is complete, has all the necessary dependencies, and is ready to run.

- Same behavior on the local machine as well as dev/staging/production servers

Docker cannot always guarantee the same behavior because there is always a human factor. However, it minimizes the probability of errors caused by different versions of operating systems or dependencies. With the right approach to creating a Docker image, your application will always run with the OS version and dependencies it needs.

- Simple and clear monitoring

Thanks to Docker, you can monitor the logs of all running containers. You don't need to remember where your application and its dependencies write their logs, or create custom hooks to collect them. You can integrate an external logging driver and monitor your application's logs in one place.
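Docker collects whatever a container's main process writes to stdout and stderr (this is what `docker logs` shows and what logging drivers forward), so the simplest setup is for the application to log there. A minimal Python sketch that writes one JSON object per line; the field names and the `configure_logging` helper are assumptions chosen for easy parsing by an external log system.

```python
import json
import logging
import sys
from typing import TextIO


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON line."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "time": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        })


def configure_logging(stream: TextIO = sys.stdout, level: int = logging.INFO) -> None:
    """Send all log records to one stream (stdout by default) as JSON lines."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    root = logging.getLogger()
    root.handlers[:] = [handler]  # Replace any previously configured handlers.
    root.setLevel(level)


if __name__ == "__main__":
    configure_logging()
    logging.getLogger("orders").info("order %s created", 42)
```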

- Ease of scaling

By design, Docker pushes you toward basic principles: configuration via environment variables, communication over TCP/UDP, etc. If your application is designed correctly, it is ready to scale, and not only on Docker.
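A minimal sketch of configuration via environment variables in Python. The variable names `DATABASE_URL`, `PORT`, and `DEBUG` and their defaults are hypothetical; the point is that the same image can be configured differently per environment, for example with `docker run -e PORT=9000 ...`, without being rebuilt.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    database_url: str
    port: int
    debug: bool


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Build settings from environment variables, falling back to defaults."""
    return Settings(
        database_url=env.get("DATABASE_URL", "postgresql://localhost:5432/app"),
        port=int(env.get("PORT", "8000")),
        debug=env.get("DEBUG", "false").strip().lower() in {"1", "true", "yes"},
    )


if __name__ == "__main__":
    print(load_settings())
```

Passing the environment as a parameter keeps the function easy to test with a plain dictionary.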

- Platform support

Linux is the native platform for Docker, so it supports Linux kernel features. However, Docker can also run on macOS and Windows. The only difference is that on these two OSes, Docker is encapsulated in a tiny virtual machine. Docker for macOS and Windows has improved significantly: it is easy to use and very similar to the native tool.

Is Docker still relevant in 2023?

Just as containers streamline cargo transportation, Docker containers help run faster and more efficient CI/CD processes. It's not just another technology trend but a paradigm supported by giants like PayPal, Visa, Swisscom, General Electric, Splink, and more.

Thanks to Docker, it has become much easier to develop and maintain applications and to move them from server to server: for example, from the cloud to a server at home or to a VPS host. This gives developers a number of advantages. Docker containers are minimalistic and provide compatibility. They are building blocks with easily interchangeable parts that speed up development.

Containers solve a lot of problems, but they also have disadvantages. Programs in a container are less isolated from the host environment than they are in a virtual machine. And the container engine itself consumes resources on the server, so programs can run slower than on bare metal.

Containers are not designed to store data. They are loaded and run from an image that describes their contents, and by default this image is immutable and does not save state. When a container is removed, any changes made inside it disappear forever. To save state, you need a separate solution, such as a Docker volume or an external database.

The growth in the popularity and number of containers has changed how applications are built: instead of monolithic stacks, microservices running in cloud networks have become more common. As a result, users needed tools to orchestrate and manage large groups of containers.

Recently, a new type of cloud service has appeared, where you can run applications and databases and even work with Docker containers without Docker itself. For example, Fly.io, Stackpath, Deno.land, Vercel.app. Perhaps the future belongs to such services.

Docker Alternatives - pros and cons of containers

The most famous name on lists of Docker alternatives is Kubernetes, although strictly speaking it orchestrates containers rather than replacing the container engine. It is a container management tool that automates deployments. Kubernetes is an open-source, portable platform developed by Google and now maintained by the Cloud Native Computing Foundation (CNCF). It makes updating apps easier and faster, without any downtime. It schedules containers across the cluster and manages the workload.

Kubernetes has two other names - "k8s" and "Kube." This orchestration platform automates many manual processes, such as deploying, managing, and scaling containerized applications.

Its main features include:

Automation of manual processes - you describe the desired state, and Kubernetes gradually changes the current state to match it.
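The idea of a desired state can be illustrated with a toy reconciliation loop. This is a simplified sketch, not Kubernetes code: `ToyCluster` and `reconcile` are hypothetical names, and real controllers work against the API server and handle many more cases. It compares the desired replica count with what is running and returns the actions needed to close the gap.

```python
import itertools
from dataclasses import dataclass, field
from typing import Iterator


@dataclass
class ToyCluster:
    """Hypothetical in-memory stand-in for the state a controller observes."""
    running: list[str] = field(default_factory=list)
    ids: Iterator[int] = field(default_factory=itertools.count)


def reconcile(desired_replicas: int, cluster: ToyCluster) -> list[str]:
    """Start or stop containers until the running count matches the desired count."""
    actions: list[str] = []
    while len(cluster.running) < desired_replicas:
        name = f"web-{next(cluster.ids)}"
        cluster.running.append(name)
        actions.append(f"start {name}")
    while len(cluster.running) > desired_replicas:
        name = cluster.running.pop()  # Stop the most recently started container.
        actions.append(f"stop {name}")
    return actions


if __name__ == "__main__":
    cluster = ToyCluster()
    print(reconcile(3, cluster))  # scale up from nothing
    cluster.running.remove("web-1")  # simulate a crashed container
    print(reconcile(3, cluster))  # the loop replaces it
```

Because the loop only compares "desired" with "observed", the same code handles scaling up, scaling down, and replacing a crashed container.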

Load Balancing – if traffic to a container is high, Kubernetes can balance the load: it distributes network traffic across instances to keep the deployment stable.

Self-healing is one of Kube's best features. Kubernetes restarts failed containers, replaces them, and kills containers that don't respond to user-defined health checks.
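When a container keeps failing, the kubelet does not restart it in a tight loop. According to the Kubernetes documentation, restarts follow an exponential back-off (10 s, 20 s, 40 s, ...) capped at five minutes. The small function below reproduces that schedule as an illustration; its name is hypothetical, and details such as resetting the timer after a container has run cleanly for a while are omitted.

```python
def restart_delay(restart_count: int, base_seconds: float = 10.0, cap_seconds: float = 300.0) -> float:
    """Delay before the next restart after `restart_count` previous restarts."""
    # Clamp the exponent so huge restart counts cannot overflow the float math.
    exponent = min(restart_count, 30)
    return min(base_seconds * 2 ** exponent, cap_seconds)


if __name__ == "__main__":
    print([restart_delay(n) for n in range(7)])
```

The growing delay gives a failing dependency time to recover and keeps a crash-looping container from consuming node resources.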

Storage Orchestration – users can automatically mount the storage system of their choice using Kubernetes.

Kubernetes is generally best for complex and enterprise development projects and may be overkill for smaller-scale projects. If you don't need the extra scalability of Kubernetes, it may not justify the additional cost and complexity.

Among other popular container tools, we can name the following:

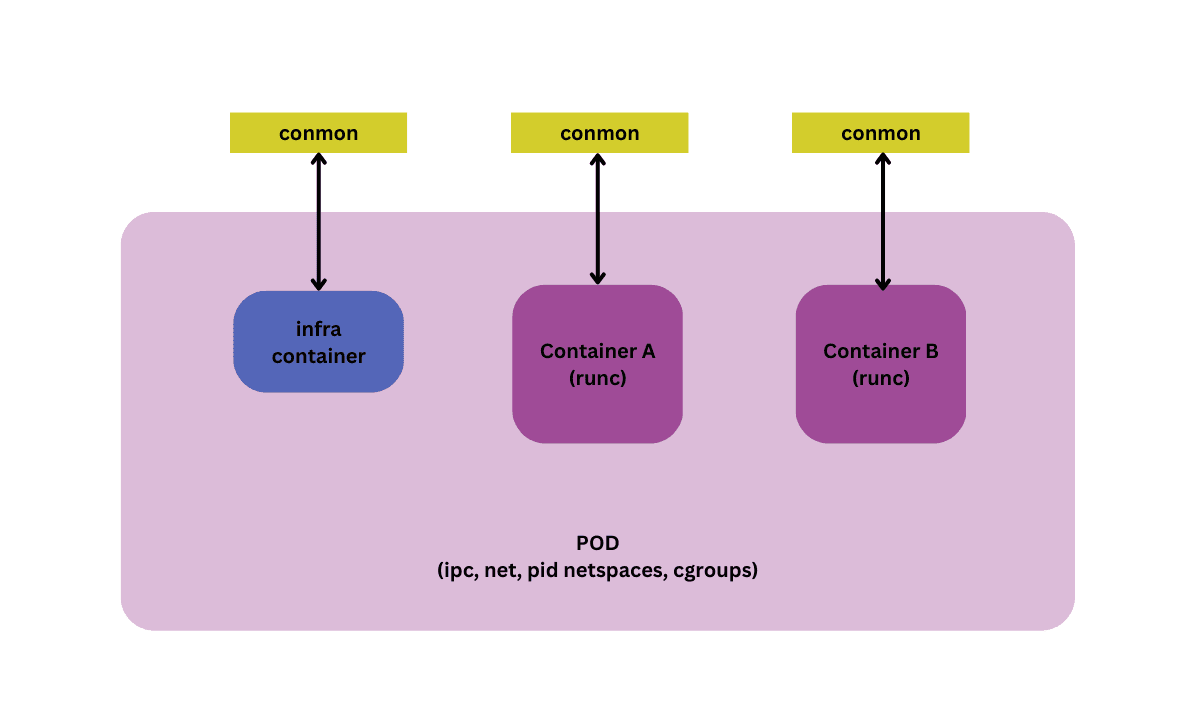

Podman (Pod Manager) is an open-source container engine that is a little more complicated than Docker, but it is designed following the “Unix philosophy.”

Another feature of Podman that is not yet available in Docker is the ability to create and run pods: groups of containers deployed together. Pods are also the smallest unit of execution in Kubernetes, which makes it easier to migrate to Kubernetes if needed.

Podman is a command-line utility with commands similar to Docker's. However, it does not require a background daemon and can work without root privileges. By default, on many distributions, it uses crun as the container runtime.

The ability to work with non-root containers leads to several peculiarities:

– Podman files (images, containers, etc.) of users with root access are stored in the /var/lib/containers directory; for users without root access, they are stored in ~/.local/share/containers

– non-root users, by default, cannot use privileged ports and cannot fully use some commands
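These two rules can be expressed as a small helper. The paths come from the list above; the port threshold is read from the Linux sysctl `net.ipv4.ip_unprivileged_port_start` (1024 by default) when it is available. The function names are hypothetical, and Podman's real storage location can be changed in its configuration (`storage.conf`), so treat this as an approximation.

```python
import os
from pathlib import Path
from typing import Optional

SYSCTL_FILE = Path("/proc/sys/net/ipv4/ip_unprivileged_port_start")


def podman_storage_dir(uid: int, home: Path) -> Path:
    """Default location of Podman images and containers for a given user."""
    if uid == 0:
        return Path("/var/lib/containers")
    return home / ".local" / "share" / "containers"


def unprivileged_port_start() -> int:
    """Lowest port a non-root user may bind (Linux default: 1024)."""
    try:
        return int(SYSCTL_FILE.read_text().strip())
    except (OSError, ValueError):
        return 1024


def can_publish_port(port: int, uid: int, threshold: Optional[int] = None) -> bool:
    """Root may bind any port; other users only ports at or above the threshold."""
    if threshold is None:
        threshold = unprivileged_port_start()
    return uid == 0 or port >= threshold


if __name__ == "__main__":
    uid = os.getuid()
    print(podman_storage_dir(uid, Path.home()))
    print("can publish port 80:", can_publish_port(80, uid))
```

In practice, rootless containers usually publish services on a high host port (for example, mapping host port 8080 to port 80 inside the container) instead of binding a privileged port directly.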

For installation, you need to use the official guide - Podman Installation Instructions, which contains instructions for Linux, Windows, and Mac. Containers require Linux kernel features, so they run natively under Linux, almost natively under recent versions of Windows, thanks to WSL2, and not natively under Mac.

Containerd is another tool that runs as a daemon on Linux and acts as an interface between your container engine and the container runtime. It can use the LVM2 features described below, supports the OCI container format, and can run in any ecosystem.

Docker started developing the containerd project in 2014 as a low-level manager for the Docker engine but later gave it away to the community. Its development was taken over by the Cloud Native Computing Foundation (CNCF), which in 2019 released it as an open standard for any cloud platform and different operating systems.

The LVM2 logical volume manager can merge or split block devices into synthetic (virtual) ones, so a volume becomes an abstraction. With thin provisioning, a 1 TB block device can be split into a thousand synthetic 5 GB devices, because space is allocated only when data is actually written. It is also possible to create snapshots as other synthetic devices and share blocks between related devices with copy-on-write semantics.
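The figures in this example only add up with thin provisioning, where the synthetic devices together promise more space than the underlying device holds and blocks are allocated on first write. Assuming decimal units (1 TB = 1000 GB), the resulting overcommit ratio is:

```latex
\frac{1000 \times 5\,\text{GB}}{1\,\text{TB}} = \frac{5000\,\text{GB}}{1000\,\text{GB}} = 5
```

So the pool can serve all thousand devices only as long as, on average, each one actually uses no more than 1 GB, a fifth of its nominal 5 GB.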

Buildah is another alternative to Docker, focused on building images. Like Podman, it is developed by Red Hat, and it is often used together with Podman: Podman uses part of Buildah's functionality to implement its build subcommand.

If you need fine-grained control over images, you can use the Buildah CLI tool. At the time of writing, Buildah works on several Linux distributions but is not supported on Windows or macOS.

The images that Buildah creates comply with the OCI specification and work just like those built with Docker. Buildah can also produce images from an existing Dockerfile or Containerfile, which makes migration much easier. Buildah also lets you use Bash scripts that bypass the limitations of Dockerfiles, making it easier to automate the process.

Like Podman, Buildah follows the fork-exec model, which requires neither a central daemon nor root access. One advantage of Buildah over Docker is the ability to commit many changes in a single layer, a frequently requested feature among container users. With Buildah, you can also create an empty container image that stores only metadata. This lets you add only the required packages to the image, so the end result can be smaller than its Docker equivalent.
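A minimal sketch of that from-scratch workflow, driven from Python. It assumes Buildah is installed and that `./hello` is a statically linked binary (an empty image contains no libraries); the binary path and image name are hypothetical. In dry-run mode it only prints the commands and uses `working-container`, the name Buildah typically gives a container created from `scratch`.

```python
import shlex
import subprocess


def build_minimal_image(binary: str, image: str, dry_run: bool = True) -> list[list[str]]:
    """Create an image from scratch containing a single binary; return the commands issued."""
    issued: list[list[str]] = []

    def run(cmd: list[str]) -> str:
        issued.append(cmd)
        print(shlex.join(cmd))
        if dry_run:
            return "working-container"  # Placeholder for the container name Buildah prints.
        result = subprocess.run(cmd, check=True, capture_output=True, text=True)
        return result.stdout.strip()

    ctr = run(["buildah", "from", "scratch"])  # empty image, metadata only
    run(["buildah", "copy", ctr, binary, "/hello"])  # add just the binary
    run(["buildah", "config", "--entrypoint", '["/hello"]', ctr])  # set how it starts
    run(["buildah", "commit", ctr, image])  # all changes go into one layer
    return issued


if __name__ == "__main__":
    build_minimal_image("./hello", "localhost/hello:latest")
```

Because `buildah commit` captures everything changed since `buildah from`, the copy and config steps end up in a single layer, and the image holds nothing but the binary and its metadata.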

Conclusion

Containerization helps developers and organizations build, ship, and run applications: containers contain everything needed to run an application on a system with container technology. You can use any alternative to Docker as long as it follows industry standards for container formats and runtimes.

Containerization lets us flexibly adapt the settings our applications need without touching the global settings of the server. If programs are already running there, we can start new ones isolated from them and thus keep all systems operational.

This technology is now very popular among companies and requires basic knowledge and hands-on skills, so consider using containers for your projects, even small ones. In any case, whether containers are worth using should be decided based on the task: for some tasks, you can't do without Docker and its alternatives, and for others, you don't need containers at all.

If you want to learn more about containers and don't know where to start, the RabbitPeppers team can help you choose and implement container solutions that fit your needs and business.